Continuously Deploying Mirage Unikernels to Google Compute Engine using CircleCI

Trying to blow the buzzword meter with that title...

Note of Caution!

This never made it quite 100% of the way. It was blocked largely because I couldn't get the correct versions of the dependencies to install in CI. Bits and pieces of this may still be useful for others though, so I'm putting this up in case it helps out.

Also, I really like the PIC bug, it tickles me how far down the stack that ended up being. It may be the closest I ever come to being vaguely involved (as in having stumbled across it, not having diagnosed or fixed it) in something as interesting as Dave Baggett's hardest bug ever.

Feel free to ping me on the OCaml discourse, though I'll likely just point you at the more experienced and talented people who helped me put this all together (in particular Martin Lucina, an absurdly intelligent and capable OG hacker and a driving force behind Solo5).

Topics

- What are unikernels?

- What's MirageOS?

- Public hosting for unikernels

- AWS

- GCE

- DeferPanic

- Why GCE?

- Problems

- Xen -> KVM (testing kernel output via QEMU)

- Bootable disk image

- Virtio problems

- DHCP lease

- TCP/IP stack

- Crashes

- Deployment

- Compiling an artifact

- Initial deploy script

- Zero-downtime instance updates

- Scaling based on CPU usage (how cool are the GCE suggestions to downsize an under-used image?)

- Custom deployment/infrastructure with Jitsu [1]

Continuously Deploying Mirage Unikernels to Google Compute Engine using CircleCI

Or "Launch your unikernel-as-a-site with zero-downtime rolling updates, health-check monitors that'll restart any crashed instance every 30 seconds, and a load balancer that'll auto-scale based on CPU usage, all with every git push"

This post talks about achieving a production-like deploy pipeline for a publicly-available service built using Mirage, specifically using the fairly amazing Google Compute Engine infrastructure. I'll talk a bit about the progression to the current setup, and some future platforms that might be usable soon.

What are unikernels?

Unikernels are specialised, single-address-space machine images constructed by using library operating systems.

Easy! ...right?

The short, high-level idea is that unikernels are the equivalent of opt-in operating systems, rather than opt-out-if-you-can-possibly-figure-out-how.

For example, when we build a virtual machine using a unikernel, we only include the code necessary for our specific application. Don't use a block-storage device for your Heroku-like application? The code to interact with block-devices won't be run at all in your app - in fact, it won't even be included in the final virtual machine image.

And when your app is running, it's the only thing running. No other processes vying for resources, threatening to push your server over in the middle of the night even though you didn't know a service was configured to run by default.

There are a few immediately obvious advantages to this approach:

- Size: Unikernels are typically microscopic as deployable artifacts

- Efficiency: When running, unikernels only use the bare minimum of what your code needs. Nothing else.

- Security: Removing millions of lines of code and eliminating the inter-process protection model from your app drastically reduces attack surface

- Simplicity: Knowing exactly what's in your application, and how it's all running considerably simplifies the mental model for both performance and correctness

What's MirageOS?

MirageOS is a library operating system that constructs unikernels for secure, high-performance network applications across a variety of cloud computing and mobile platforms

Mirage (which is a very clever name once you get it) is a library to build clean-slate unikernels using OCaml. That means to build a Mirage unikernel, you need to write your entire app (more or less) in OCaml. I've talked quite a bit now about why OCaml is pretty solid, but I understand if some of you run away screaming now. No worries, there are other approaches to unikernels that may work better for you[2]. But as for me and my house, we will use Mirage.

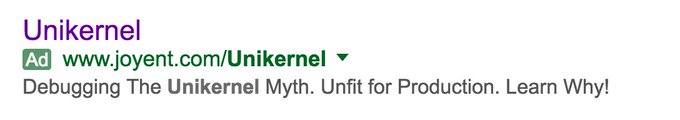

There are some great talks that go over some of the cool aspects of Mirage in much more detail [3][4], but it's unclear whether unikernels are actually usable in any major way. There are even companies that take out ads against unikernels, highlighting many of the ways in which they're (currently) unsuitable for production:

Bit weird, that.

But I suspect that bit by bit this will change, assuming sufficient elbow grease and determination on our parts. So with that said, let's roll up our sleeves and figure out one of the biggest hurdles to using unikernels in production today: deploying them!

Public hosting for unikernels

Having written our app as a unikernel, how do we get it up and running in a production-like setting? I've used AWS fairly heavily in the past, so it was my initial go-to for this site.

AWS runs on the Xen hypervisor, which is the main non-unix target Mirage was developed for. In theory, it should be the smoothest option. Sadly, the primitives and API that AWS expose just don't match well. The process is something like this:

- Download the AWS command line tools

- Start an instance

- Create, attach, and partition an EBS volume (we'll turn this into an AMI once we get our unikernel on it)

- Copy the Xen unikernel over to the volume

- Create the GRUB entries... blablabla

- Create a snapshot of the volume ohmygod

- Register your AMI using the pv-grub kernel id what was I doing again

- Start a new instance from the AMI

Unfortunately, the third step means that we need a build machine that's on the AWS network so that we can attach the volume, and we need to SSH into the machine to do the heavy lifting. We also end up with a lot of leftover detritus: the volume, the snapshot, and the AMI. It could be scripted at some point though.

GCE to the rescue!

GCE is Google's public computing offering, and I currently can't recommend it highly enough. The per-minute pricing model is a much better match for instances that boot in less than 100ms, the interface is considerably nicer and offers the equivalent REST API call for most actions you take, and the primitives exposed in the API mean we can much more easily deploy a unikernel. Win, win, win!

GCE Challenges

Xen -> KVM

There is a big potential show-stopper though: GCE uses the KVM hypervisor instead of Xen, which is much, much nicer, but not supported by Mirage as of the beginning of this year. Luckily, some fairly crazy heroes (Dan Williams, Ricardo Koller, and Martin Lucina, specifically) stepped up and made it happen with Solo5!

Solo5 Unikernel implements a unikernel base, or the lowest layer of code inside a unikernel, which interacts with the hardware abstraction exposed by the hypervisor and forms a platform for building language runtimes and applications. Solo5 currently interfaces with the MirageOS ecosystem, enabling Mirage unikernels to run on either Linux KVM/QEMU

I highly recommend checking out a replay of the great webinar the authors gave on the topic: https://developer.ibm.com/open/solo5-unikernel/. It'll give you a sense of how much room for optimization and cleanup there is as our hosting infrastructure evolves.

Now that we have KVM kernels, we can test them locally fairly easily using QEMU, which shortened the iteration cycle while we dealt with teething problems on the new platform.

Bootable disk image

This was just on the other side of my experience/abilities, personally. Constructing a disk image that would boot a custom (non-Linux) kernel isn't something I've done before, and I struggled to remember how the pieces fit together. Once again, @mato came to the rescue with a lovely little script that does exactly what we need, no muss, no fuss.
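With a bootable image in hand, the local QEMU test loop mentioned earlier could look roughly like the sketch below. This is not the setup used for this site: the memory size, the user-mode networking (which has a built-in DHCP server, handy for a unikernel configured with DHCP) and the `tmp/disk.raw` default are all assumptions, and the `run` helper is a hypothetical wrapper that only prints the command unless `DRY_RUN=0` is set.

```shell
# Sketch: boot the raw disk image under QEMU with a virtio network card.
# DISK_IMAGE and MEMORY_MB are assumed defaults, adjust to taste.
DISK_IMAGE="${DISK_IMAGE:-tmp/disk.raw}"
MEMORY_MB="${MEMORY_MB:-128}"

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

boot_in_qemu() {
  # -nographic sends the serial console to the terminal, which is where
  # the unikernel prints its log output.
  run qemu-system-x86_64 \
    -m "$MEMORY_MB" \
    -nographic \
    -drive "file=$DISK_IMAGE,format=raw" \
    -netdev user,id=n0 \
    -device virtio-net-pci,netdev=n0
}

boot_in_qemu
```

Run it with `DRY_RUN=0` to actually start QEMU. Note that a stock QEMU would not have reproduced the GCE-specific Virtio behaviour described in the next section, so this only catches problems that show up on every KVM host.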

Virtio driver

Initially we had booting unikernels that printed to the serial console just fine, but didn't seem to get any DHCP lease. The unikernel was sending DHCP discover broadcasts, but not getting anything in return, poor lil' fella. I then tried with a hard-coded IP literally configured at compile time, and booted an instance on GCE with a matching IP, and still nothing. Nearly the entire Mirage stack is in plain OCaml though, including the TCP/IP stack, so I was able to add in plenty of debug log statements and see what was happening. Finally tracked everything down to problems with the Virtio implementation, quoting @ricarkol:

The issue was that the vring sizes were hardcoded (not the buffer length as I mentioned above). The issue with the vring sizes is kind of interesting, the thing is that the virtio spec allows for different sizes, but every single qemu we tried uses the same 256 len. The QEMU in GCE must be patched as it uses 4096 as the size, which is pretty big, I guess they do that for performance reasons. - @ricarkol

I tried out the fixes, and we had a booting, publicly accessible unikernel! However, it was extremely slow, with no obvious reason why. Looking at the logs, I saw that I had forgotten to remove a ton of per-frame logging. Careful what you wish for with accessibility, I guess!

Position-independent Code

This was a deep rabbit hole. The bug manifested as `Fatal error: exception (Invalid_argument "equal: abstract value")`, which seemed strange since the site worked on Unix and Xen backends, so there shouldn't have been anything logically wrong with the OCaml types, despite what the exception message hinted at. Read this comment for the full, thrilling detective work and explanation, but a simplified version seems to be that portions of the OCaml/Solo5 code were placed in between the bootloader and the entry point of the program, and the bootloader zero'd all the memory in-between (as it should) before handing control over to our program. So eventually our program did some comparison of values, and a portion of the value had at compile/link time been relocated and destroyed, and OCaml threw the above error.

Crashes

Finally, we have a booting, non-slow, publicly-accessible Mirage instance running on GCE! Great! However, every ~50 HTTP requests, it panics and dies:

    [11] serving //104.198.15.176/stylesheets/normalize.css.
    [12] serving //104.198.15.176/js/client.js.
    [13] serving //104.198.15.176/stylesheets/foundation.css.
    [10] serving //104.198.15.176/images/sofuji_black_30.png.
    [10] serving //104.198.15.176/images/posts/riseos_error_email.png.
    PANIC: virtio/virtio.c:369
    assertion failed: "e->len <= PKT_BUFFER_LEN"

Oh no! However, being a bit of a kludgy-hacker desperate to get a stable unikernel I can show to some friends, I figured out a terrible workaround: GCE offers fantastic health-check monitors that run every 30 seconds and will restart an instance if it crashes because of a virtio (or whatever) failure. Problem solved, right? At least I don't have to restart the instance personally...

And that was an acceptable temporary fix until @ricarkol was once again able to track down the cause of the crashes, which had to do with a GCE/Virtio IO buffer descriptor wrinkle, and fix things up:

The second issue is that Virtio allows for dividing IO requests in multiple buffer descriptors. For some reason the QEMU in GCE didn't like that. While cleaning up stuff I simplified our Virtio layer to send a single buffer descriptor, and GCE liked it and let our IOs go through - @ricarkol

So now Solo5 unikernels seem fairly stable on GCE as well! Looks like it's time to wrap everything up into a nice deploy pipeline.

Deployment

With the help of the GCE support staff and the Solo5 authors, we're now able to run Mirage apps on GCE. The process in this case looks like this:

- Compile our unikernel

- Create a tar'd and gzipped bootable disk image locally with our unikernel

- Upload said disk image (should be ~1-10MB, depending on our contents. Right now this site is ~6.6MB)

- Create an image from the disk image

- Trigger a rolling update

Importantly, because we can simply upload bootable disk images, we don't need any specialized build machine, and the entire process can be automated!

One time setup

We'll create two abstract pieces that'll let us continually deploy and scale: An instance group, and a load balancer.

Creating the template and instance group

First, two quick definitions...

Managed instance groups:

A managed instance group uses an instance template to create identical instances. You control a managed instance group as a single entity. If you wanted to make changes to instances that are part of a managed instance group, you would apply the change to the whole instance group.

And templates:

Instance templates define the machine type, image, zone, and other instance properties for the instances in a managed instance group.

We'll create a template with

FINISH THIS SECTION(FIN)
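The template step above was left unfinished in this draft. As a stand-in, here is a minimal sketch of what the one-time setup might look like with `gcloud`. The template name, group name, image name and zone are the ones that appear in the deploy commands later in this post; the machine type and initial group size are assumptions, and `run` is a hypothetical helper that only prints each command unless `DRY_RUN=0` is set.

```shell
# Sketch: one-time creation of the instance template and managed group.
# TEMPLATE, IMAGE, GROUP and ZONE reuse names from the deploy commands
# in this post. MACHINE_TYPE and GROUP_SIZE are assumed values.
TEMPLATE="${TEMPLATE:-mir-riseos-1}"
IMAGE="${IMAGE:-mir-riseos-latest}"
GROUP="${GROUP:-mir-riseos-group}"
ZONE="${ZONE:-us-west1-a}"
MACHINE_TYPE="${MACHINE_TYPE:-f1-micro}"
GROUP_SIZE="${GROUP_SIZE:-1}"

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

create_template_and_group() {
  # The template records which image and machine type new instances use.
  run gcloud compute instance-templates create "$TEMPLATE" \
    --machine-type "$MACHINE_TYPE" \
    --image "$IMAGE"

  # The managed group creates identical instances from that template.
  run gcloud compute instance-groups managed create "$GROUP" \
    --template "$TEMPLATE" \
    --size "$GROUP_SIZE" \
    --zone "$ZONE"
}

create_template_and_group
```

The image has to exist before the template can reference it, so on a fresh project the upload and `images create` steps from the deploy commands would need to run once first.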

Setting up the load balancer

Honestly there's not much to say here, GCE makes this trivial. We simply say what class of instances we want (vCPU, RAM, etc.), what the trigger/threshold to scale is (CPU usage or request amount), and the image we want to boot as we scale out.

In this case, I'm using a fairly small instance with the instance group we just created, and I want another instance whenever we sustain CPU usage over 60% for more than 30 seconds:

`PUT THE BASH CODE TO CREATE THAT HERE`(FIN)
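The original commands never made it into this draft, so what follows is only a sketch of how the scaling rule described above could be expressed with `gcloud`. The 60% CPU target comes from the text; the replica cap is an assumption, and the 30-second cool-down period is the nearest setting I know of rather than an exact match for "sustained for more than 30 seconds". As before, `run` is a hypothetical helper that only prints the command unless `DRY_RUN=0` is set.

```shell
# Sketch: turn on CPU-based autoscaling for the managed instance group.
# GROUP and ZONE reuse names from this post. MAX_REPLICAS is an assumed cap.
GROUP="${GROUP:-mir-riseos-group}"
ZONE="${ZONE:-us-west1-a}"
MAX_REPLICAS="${MAX_REPLICAS:-3}"
TARGET_CPU="${TARGET_CPU:-0.6}"   # 60% average CPU across the group
COOL_DOWN="${COOL_DOWN:-30s}"     # wait before trusting a new instance's metrics

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

enable_autoscaling() {
  run gcloud compute instance-groups managed set-autoscaling "$GROUP" \
    --max-num-replicas "$MAX_REPLICAS" \
    --target-cpu-utilization "$TARGET_CPU" \
    --cool-down-period "$COOL_DOWN" \
    --zone "$ZONE"
}

enable_autoscaling
```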

Subsequent deploys

The actual cli to do everything looks like this:

    mirage configure -t virtio --dhcp=true \
      --show_errors=true --report_errors=true \
      --mailgun_api_key="<>" \
      --error_report_emails=sean@bushi.do
    make clean
    make
    bin/unikernel-mkimage.sh tmp/disk.raw mir-riseos.virtio
    cd tmp/
    tar -czvf mir-riseos-01.tar.gz disk.raw
    cd ..

    # Upload the file to Google Compute Storage
    # as the original filename
    gsutil cp tmp/mir-riseos-01.tar.gz gs://mir-riseos

    # Copy/Alias it as *-latest
    gsutil cp gs://mir-riseos/mir-riseos-01.tar.gz \
      gs://mir-riseos/mir-riseos-latest.tar.gz

    # Delete the image if it exists (--quiet skips the confirmation prompt)
    gcloud compute images delete mir-riseos-latest --quiet

    # Create an image from the new latest file
    gcloud compute images create mir-riseos-latest \
      --source-uri gs://mir-riseos/mir-riseos-latest.tar.gz

    # Updating the mir-riseos-latest *image* in place will mutate the
    # *instance-template* that points to it. To then update all of
    # our instances with zero downtime, we now just have to ask gcloud
    # to do a rolling update to a group using said
    # *instance-template*.
    gcloud alpha compute rolling-updates start \
      --group mir-riseos-group \
      --template mir-riseos-1 \
      --zone us-west1-a

Or, after splitting this up into two scripts:

    export NAME=mir-riseos-1 CANONICAL=mir-riseos GCS_FOLDER=mir-riseos
    bin/build_kvm.sh
    gce_deploy.sh

Not too shabby to - once again - launch your unikernel-as-a-site with zero-downtime rolling updates, health-check monitors that'll restart any crashed instance every 30 seconds, and a load balancer that auto-scales based on CPU usage. The next step is to hook up CircleCI so we have continuous deploy of our unikernels on every push to master.

CircleCI

The biggest blocker here, and one I haven't been able to solve yet, is the OPAM switch setup. My current docker image has (apparently) a hand-selected list of packages and pins that is nearly impossible to duplicate elsewhere.

[2] For an example of running existing applications (in this case nginx) as a unikernel, check out Madhuri Yechuri and Rean Griffith's talk.