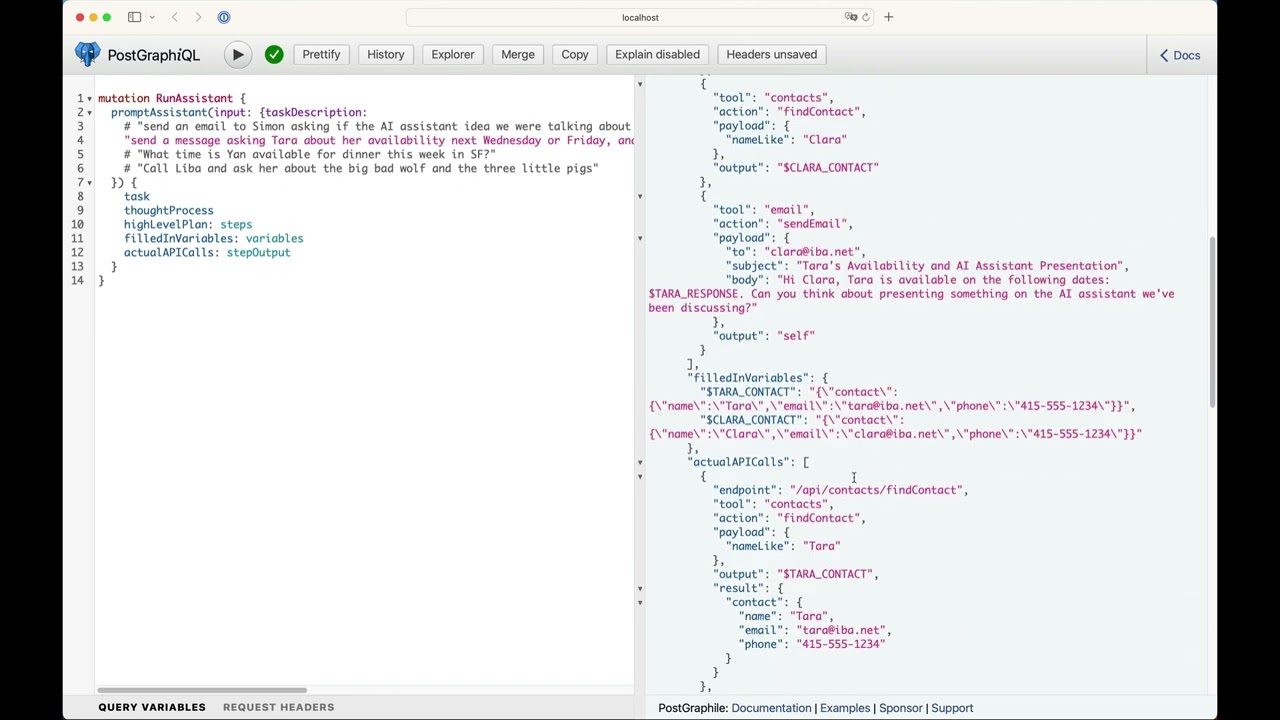

AI agents are quite interesting right now (HuggingGPT, etc.), so I figured I’d take a crack at adapting some of the techniques and see how far they could really go.

The mocked API calls are each expecting a certain data structure to work, so this could indeed be hooked up to Twilio, Gmail, Calendar (would be great with OneGraph!) to give it actual agency.
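A minimal sketch of what "mocked API calls that each expect a certain data structure" could look like. The tool names (`send_sms`, `create_event`), their argument lists, and the `dispatch` helper are all hypothetical illustrations, not the project's actual code:

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple


@dataclass
class Tool:
    """A mocked API call plus the argument names it requires."""
    name: str
    required_args: Tuple[str, ...]
    handler: Callable[..., Dict[str, Any]]


def mock_send_sms(to: str, body: str) -> Dict[str, Any]:
    # Stand-in for a real SMS API (e.g. Twilio); nothing is actually sent.
    return {"status": "queued", "to": to, "body": body}


def mock_create_event(title: str, start: str, end: str) -> Dict[str, Any]:
    # Stand-in for a real calendar API; nothing is actually created.
    return {"status": "confirmed", "title": title, "start": start, "end": end}


# Hypothetical registry: the agent may only pick tools listed here.
TOOLS = {
    "send_sms": Tool("send_sms", ("to", "body"), mock_send_sms),
    "create_event": Tool("create_event", ("title", "start", "end"), mock_create_event),
}


def dispatch(action: Dict[str, Any]) -> Dict[str, Any]:
    """Validate a model-proposed action, then run the matching mocked tool."""
    tool = TOOLS.get(action.get("tool"))
    if tool is None:
        return {"error": f"unknown tool: {action.get('tool')!r}"}
    args = action.get("args", {})
    missing = [name for name in tool.required_args if name not in args]
    if missing:
        return {"error": f"missing args: {missing}"}
    # Pass only the declared arguments so stray keys from the model are ignored.
    return tool.handler(**{name: args[name] for name in tool.required_args})
```

Returning an error dictionary instead of raising lets the error be fed back to the model as a hint to retry with a corrected structure.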

There are lots of learnings around how to guide GPT to give much more reliable answer formats, to encourage the correct tool/action for a task, etc. Lots of low-hanging fruit to improve it as well, I think.

Then there are general questions around how to structure communication between the tools. Take a freeform request such as “Schedule a meeting with Tom whenever he’s available mid-next week, but not on Thursday afternoon”: what does the resulting data structure look like for a Calendar API to actually extract availability information from it? Fun questions!
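One possible answer to the data-structure question, as a sketch only: the model resolves the fuzzy phrases into concrete intervals, and the calendar side only deals with intervals. `MeetingRequest`, its field names, and `candidate_slots` are assumptions made for illustration, not a real Calendar API:

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Tuple

Interval = Tuple[datetime, datetime]  # (start, end), end exclusive


@dataclass
class MeetingRequest:
    attendee: str
    duration: timedelta
    search_window: Interval  # e.g. "mid-next week", resolved to dates
    excluded: List[Interval] = field(default_factory=list)  # e.g. "not Thursday afternoon"


def overlaps(a: Interval, b: Interval) -> bool:
    """True when two half-open intervals share any time."""
    return a[0] < b[1] and b[0] < a[1]


def candidate_slots(req: MeetingRequest, free: List[Interval]) -> List[Interval]:
    """Cut the attendee's free intervals into slots that satisfy the request."""
    slots = []
    for start, end in free:
        # Clip each free interval to the requested search window.
        start = max(start, req.search_window[0])
        end = min(end, req.search_window[1])
        t = start
        while t + req.duration <= end:
            slot = (t, t + req.duration)
            if not any(overlaps(slot, ex) for ex in req.excluded):
                slots.append(slot)
            t += req.duration
    return slots
```

Here `free` stands for whatever free/busy data the calendar returns for Tom. The hard part, turning "mid-next week" into a `search_window`, stays with the language model.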

Still, it’s surprising to see how far it can go already. And if it were using a GraphQL API I suspect you could automate much/all of the structure information you send it, given the introspectable schema.
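As a rough sketch of that idea: the standard GraphQL introspection fields (`__schema`, `types`, `fields`, `args`) can be flattened into one-line tool descriptions for a prompt. The helper names and the output format below are my own assumptions, and the query is trimmed to one level of type wrapping:

```python
from typing import Any, Dict, List, Optional

# A trimmed-down introspection query; real schemas may need deeper ofType nesting.
INTROSPECTION_QUERY = """
{
  __schema {
    queryType { name }
    types {
      name
      fields {
        name
        description
        args { name type { kind name ofType { kind name } } }
      }
    }
  }
}
"""


def type_name(t: Optional[Dict[str, Any]]) -> str:
    """Render a possibly wrapped type reference, e.g. String! or [Int]."""
    if t is None:
        return "Unknown"
    if t["kind"] == "NON_NULL":
        return type_name(t.get("ofType")) + "!"
    if t["kind"] == "LIST":
        return "[" + type_name(t.get("ofType")) + "]"
    return t["name"]


def describe_queries(result: Dict[str, Any]) -> List[str]:
    """Turn an introspection result into one prompt line per root query field."""
    schema = result["__schema"]
    root = schema["queryType"]["name"]
    lines = []
    for t in schema["types"]:
        if t["name"] != root:
            continue
        for f in t.get("fields") or []:
            args = ", ".join(f"{a['name']}: {type_name(a['type'])}" for a in f["args"])
            desc = f.get("description") or ""
            lines.append(f"{f['name']}({args}) {desc}".strip())
    return lines
```

`describe_queries` expects the `data` portion of the server's response to `INTROSPECTION_QUERY`. How the query is sent (HTTP client, auth) is left out on purpose.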

Audio-book replacement, still in wild flux: ML-rewrite public domain stories into modern versions (Shakespeare, Sherlock Holmes, etc.) with modern references/events (slightly different each time) => another ML rewriter that takes the modern version and describes a highly detailed play version of it, including dialogue with emotion, music and sound notes, etc. => a series of text-to-voice (with emphasis on emotional acting) + music + sound effects => laid out in 3D audio space. Copyright-free audio books of stories that are good (they’ve survived for a long time for a reason), made modern and happening all around you. Could be made semi-interactive in the future as well, when the models are faster at real-time interaction.

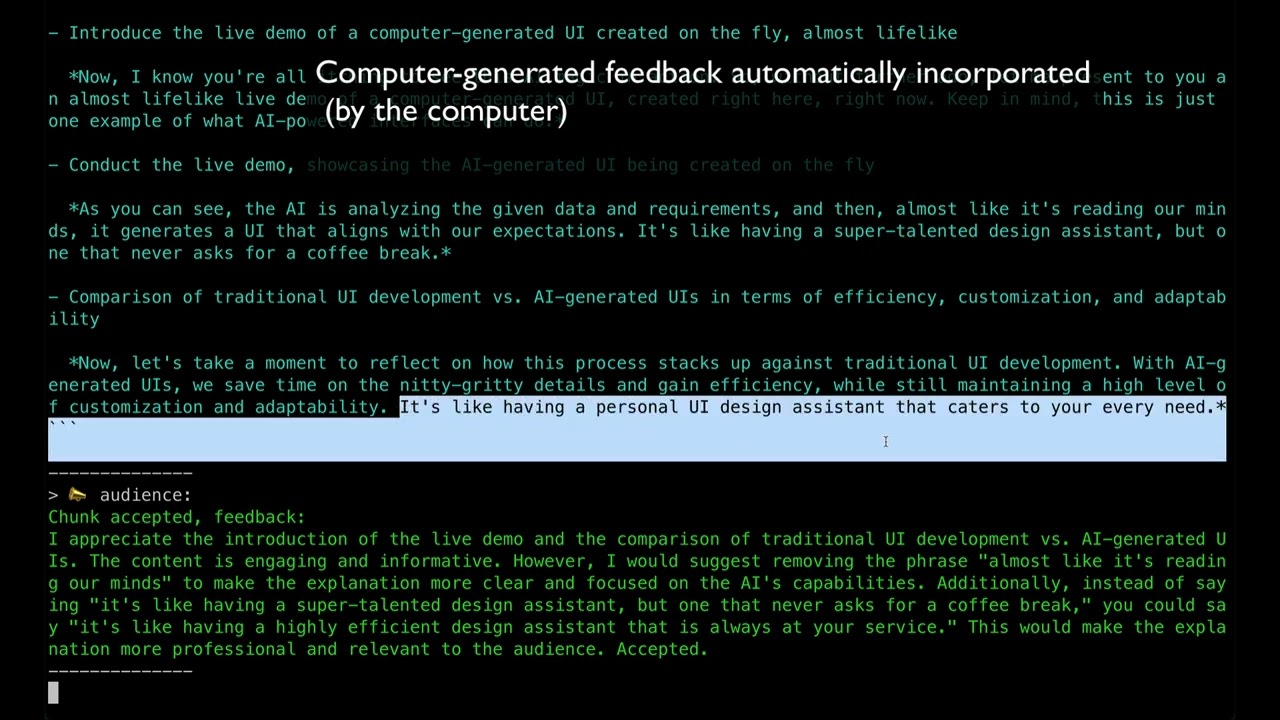

Inspired by the Reflexion paper, I built a tool that can write, edit, and speak tech talks tailored to an audience. Two bots, one an author and the other an audience, critique and incorporate each other's feedback, then the result is read out in my generated voice at 2:05.
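The author/audience loop could be sketched roughly like this. It is a simplified illustration, not the actual tool: `refine_talk`, the prompt wording, and the "LGTM" stop signal are assumptions, and each bot is modeled as a plain function from prompt text to response text so any LLM client can be plugged in:

```python
from typing import Callable

# Any function that maps a prompt to a completion can play either role.
LLM = Callable[[str], str]


def refine_talk(topic: str, audience: str, author: LLM, critic: LLM, rounds: int = 3) -> str:
    """Alternate between drafting and critiquing until the critic approves."""
    draft = author(f"Write a short tech talk about {topic} for {audience}.")
    for _ in range(rounds):
        feedback = critic(f"You are {audience}. Critique this talk:\n\n{draft}")
        if "LGTM" in feedback:  # assumed convention for "no further changes"
            break
        draft = author(
            "Revise the talk using this feedback.\n\n"
            f"Feedback:\n{feedback}\n\nTalk:\n{draft}"
        )
    return draft
```

Capping the loop with `rounds` keeps cost bounded when the critic never signs off. The final draft would then go to a text-to-speech step.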

Obvious next steps would be taking inspiration from the HuggingGPT paper and hooking it up to a few more things, then I can completely remove myself from the picture:

- Midjourney for slides?

- Deepfake for video and hand gestures?

- Uploading to YouTube?

- Writing and posting a blog post and tweet storm linking to the video?

Part of a series where I lean hard into the “make myself obsolete ASAP” role, hoping for a phoenix-like rebirth as a new, more powerful, and more useful version of myself on the other side of being automated away.

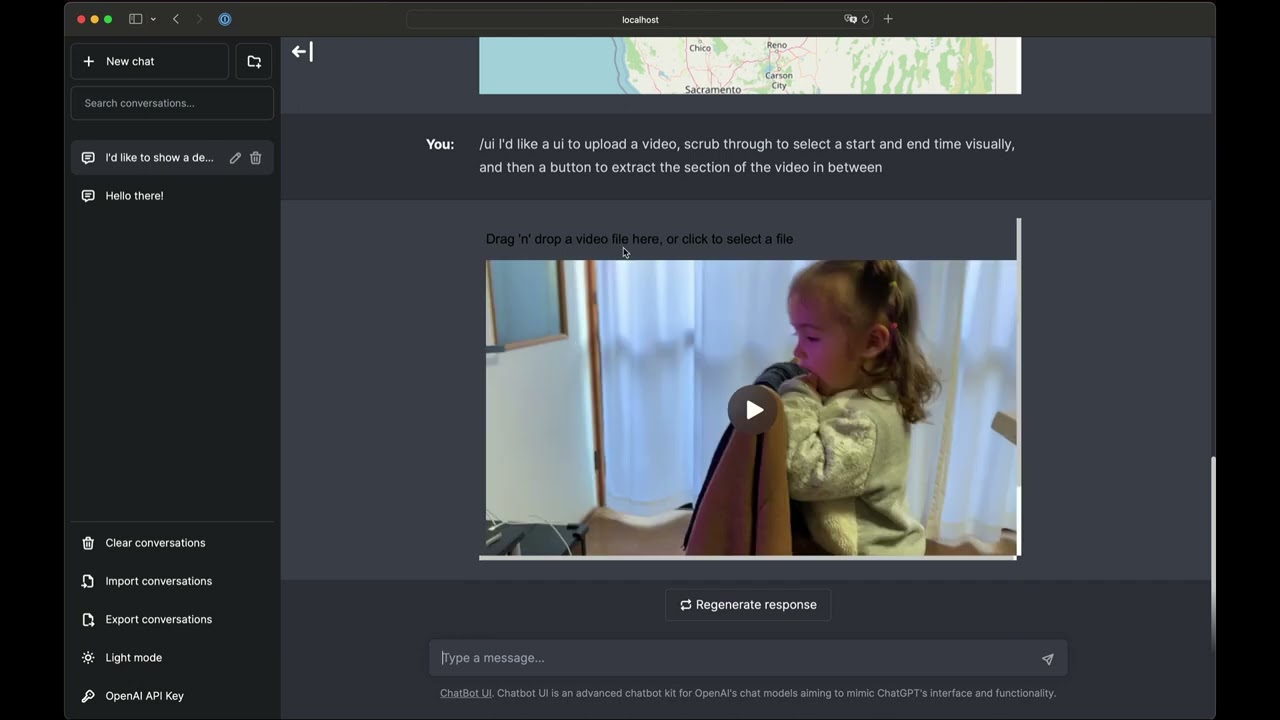

🚀Future of UI dev🔮

- ~10% fixed UIs built by hand like today

- ~40% replaced by conversational UIs

- ~50% long-tail, on-the-fly UIs generated for specific tasks, used once, then vanish.

Conjure is a new UI paradigm where UIs build themselves as ephemeral, on-demand, iterable programs that appear out of nowhere to be used, then disappear when they're no longer needed.

The high-value, artisanal UIs will be those where high-investment for even a minor edge is worth it, e.g. Bloomberg Terminal, Photoshop, etc.

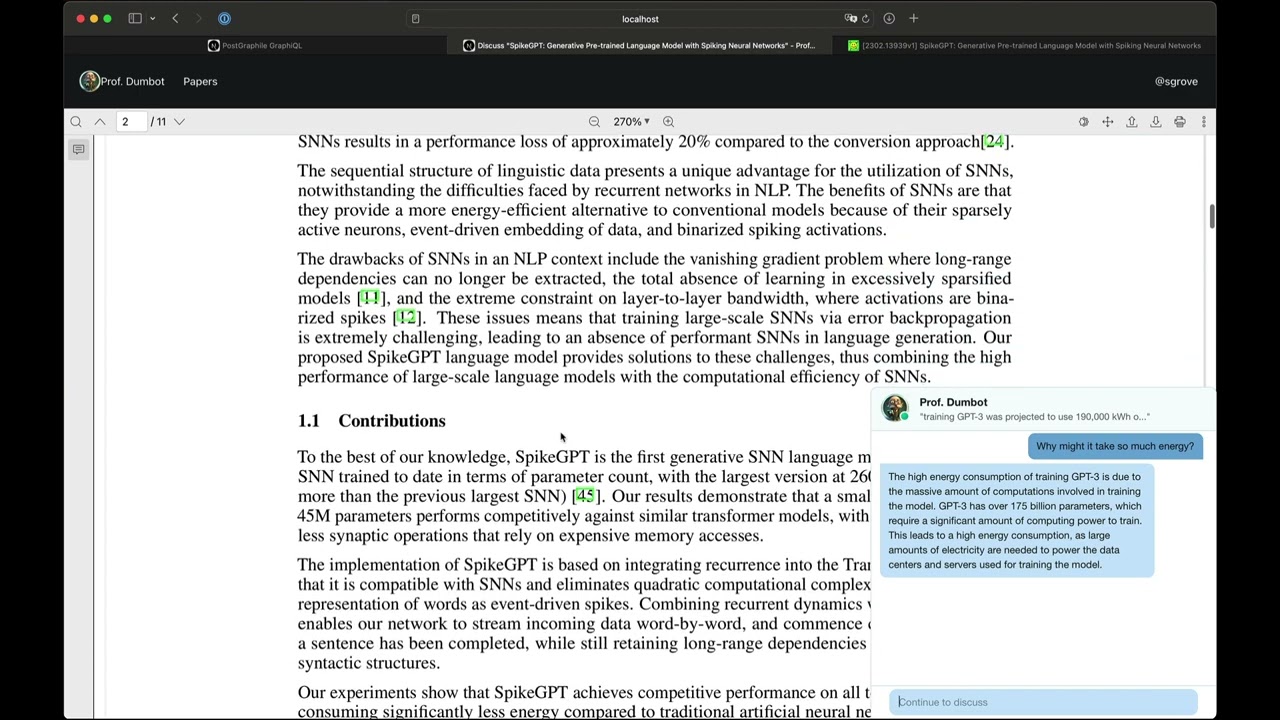

I wanted a way to ask questions interactively while reading research papers, and to track those conversations across papers down to the part of the paper that triggered the question. I added a little bit of polish to the overall experience, and it's starting to feel more and more useful.

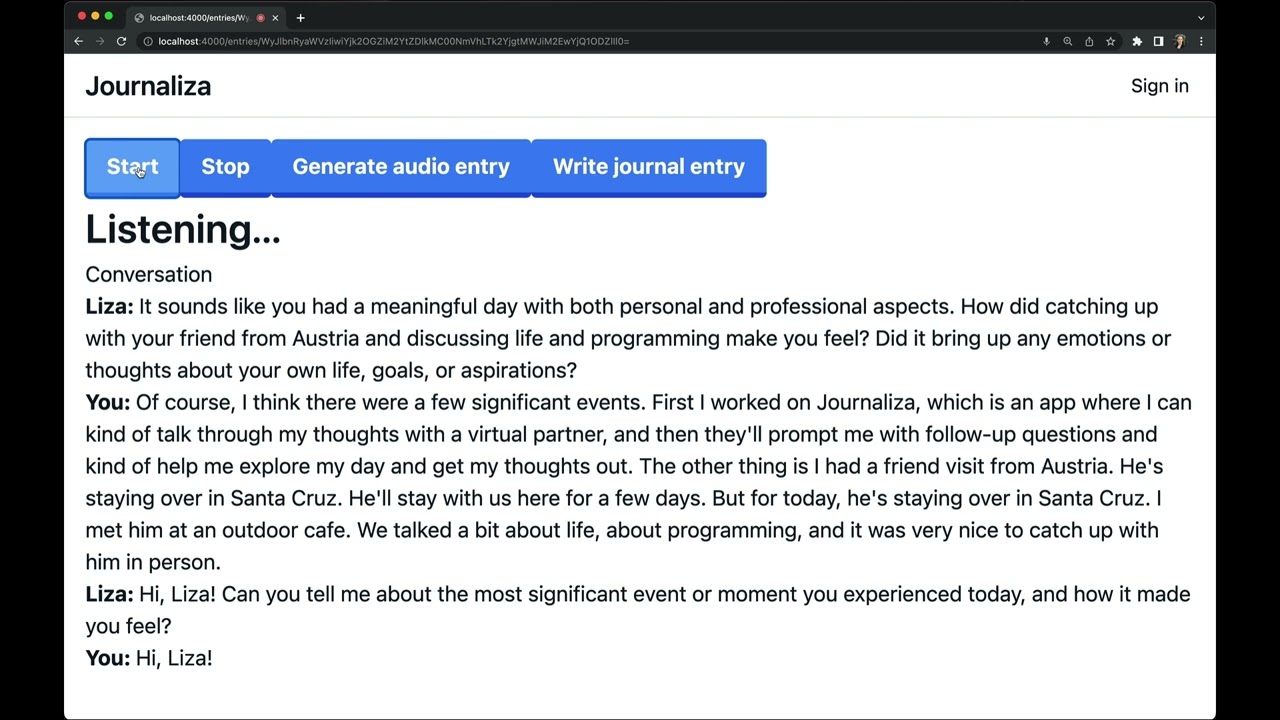

In a nod to the old ELIZA program, Journaliza is a GPT-guided diary to explore what’s on your mind, expand on it, and write out a narrative in the form of a first-person journal entry.

You can decompress and get out of your head while driving, sitting on the couch, going for a walk, etc.

This project has been the most personally engaging so far. The more I find myself using it, the happier I tend to be.

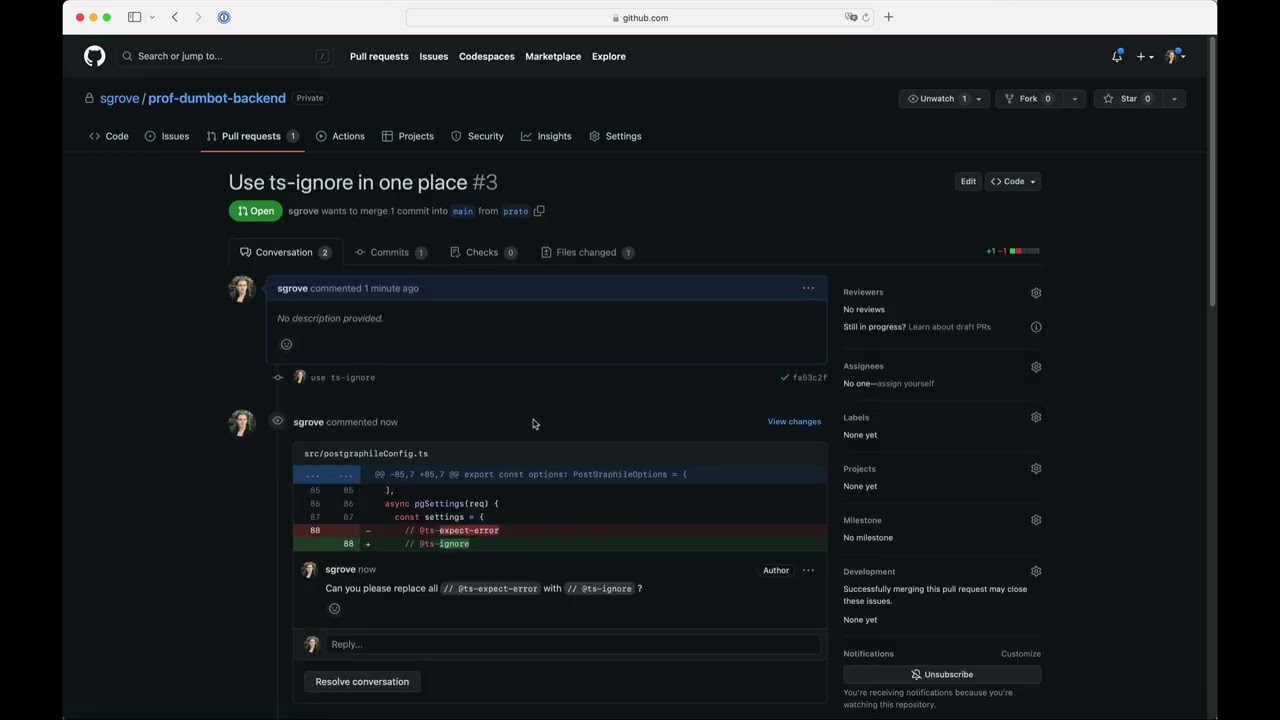

I built a pull request tool that uses GPT-4 to make suggestions on GitHub PRs. When I come across a request that requires switching context, I leave a comment and the tool uses the entire file context to create a suggestion in the form of a comment that can be committed directly. This way, I can make suggestions and get a first pass at the change inline, inside the PR, without relying on anyone else to do the work.
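A comment that "can be committed directly" maps onto GitHub's suggested-changes syntax: a fenced block tagged `suggestion` inside a review comment. Below is a small sketch of building such a comment. The function names are hypothetical, and the payload fields are modeled on GitHub's REST endpoint for creating a pull request review comment, so check them against the current docs before relying on them:

```python
from typing import Any, Dict

# Built programmatically so this example can itself sit inside a fenced block.
FENCE = "`" * 3


def suggestion_comment(explanation: str, replacement: str) -> str:
    """Build a review comment body containing a GitHub suggested change."""
    return f"{explanation}\n\n{FENCE}suggestion\n{replacement.rstrip()}\n{FENCE}"


def review_comment_payload(body: str, commit_id: str, path: str, line: int) -> Dict[str, Any]:
    """Assumed JSON payload for POST /repos/{owner}/{repo}/pulls/{pull_number}/comments."""
    return {
        "body": body,
        "commit_id": commit_id,
        "path": path,
        "line": line,     # line in the diff that the suggestion replaces
        "side": "RIGHT",  # comment on the new version of the file
    }
```

The model's job is then only to produce `replacement` (and a short `explanation`) from the file context and the original comment.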

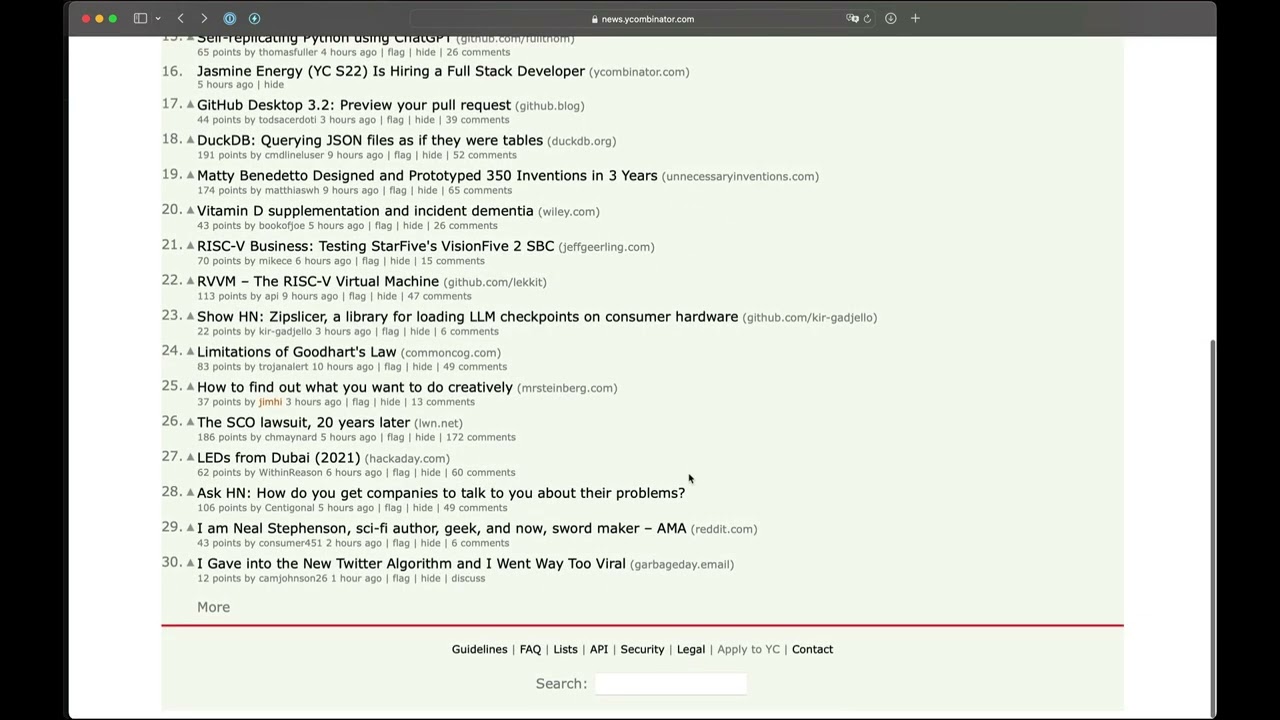

Summarize an HN discussion and extract overall sentiment and keywords automatically. Then, using the extracted summary + sentiment, generate an embedding and store it along with whether I liked/disliked it. Then use the embedding to determine how likely I am to enjoy other stories on the HN front page.
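One simple way to turn stored embeddings and like/dislike labels into a "will I enjoy this?" score is a nearest-neighbour vote over cosine similarity. This is a sketch of that general technique, not necessarily what the project does. `predict_like` and the choice of `k` are assumptions, and the embeddings are taken as plain lists of floats from whatever embedding model is in use:

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def predict_like(
    candidate: Sequence[float],
    rated: List[Tuple[Sequence[float], bool]],
    k: int = 3,
) -> float:
    """Fraction of the k most similar rated stories that were liked."""
    nearest = sorted(rated, key=lambda r: cosine(candidate, r[0]), reverse=True)[:k]
    if not nearest:
        return 0.5  # no history yet: no opinion either way
    return sum(1 for _, liked in nearest if liked) / len(nearest)
```

Each front-page story would be summarized and embedded the same way as the rated ones, then ranked by `predict_like`.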

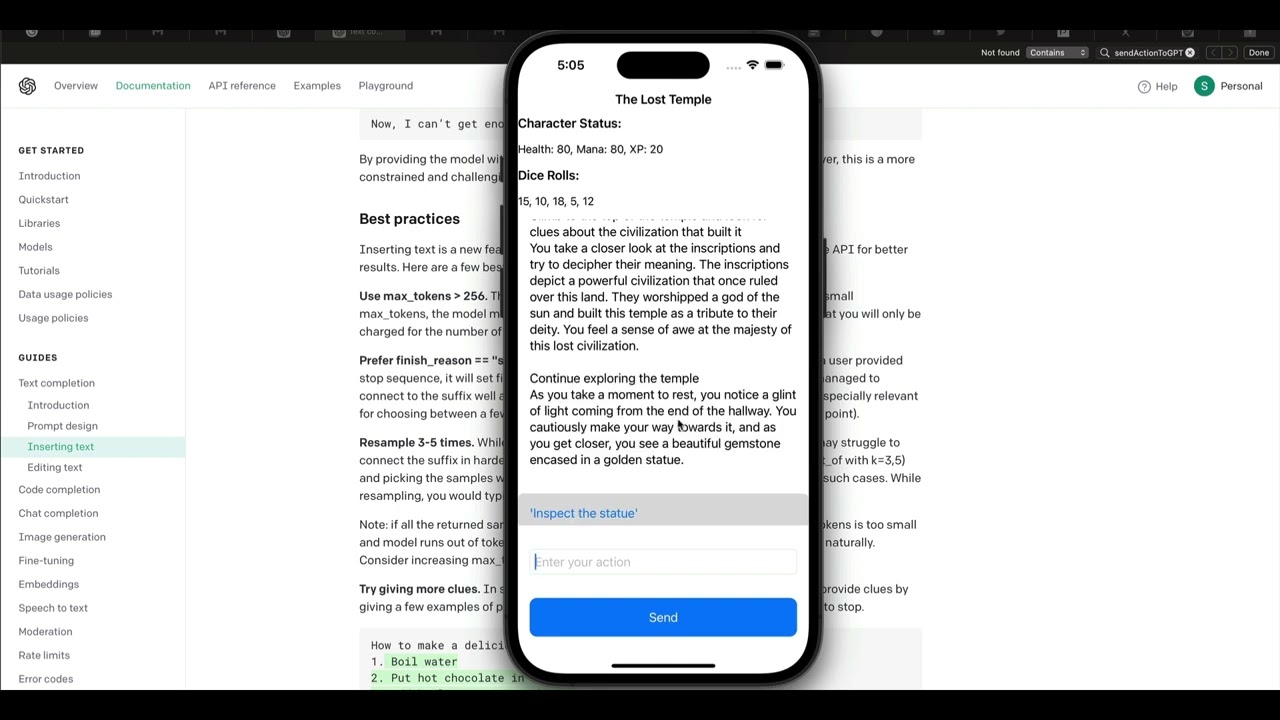

A Swift project to have a GPT-4 powered 'dungeon' story where the setting is made up on the spot, and then the user is provided with actions. The storyteller tracks HP, mana, and other stats as the user decides what they want to do. At each step, a comic book image is generated for the scene description by a Stable Diffusion service (though Midjourney would be preferable). I'd like to hook up Play.ht to have the story narrated as well.
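The stat tracking could be sketched as below. It is written in Python for illustration rather than the project's Swift, and the turn format (`narration`, `hp_delta`, `mana_delta`) is a hypothetical JSON shape the storyteller model would be asked to return:

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class PlayerState:
    hp: int = 20
    mana: int = 10
    log: List[str] = field(default_factory=list)

    @property
    def alive(self) -> bool:
        return self.hp > 0


def apply_turn(state: PlayerState, turn: Dict[str, Any]) -> PlayerState:
    """Apply one storyteller turn, clamping stats so they never go negative."""
    state.hp = max(0, state.hp + int(turn.get("hp_delta", 0)))
    state.mana = max(0, state.mana + int(turn.get("mana_delta", 0)))
    state.log.append(turn.get("narration", ""))
    return state
```

Keeping the numbers in code rather than in the model's memory means the current stats can be re-sent with every prompt. The `narration` text can double as the scene description for image generation.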

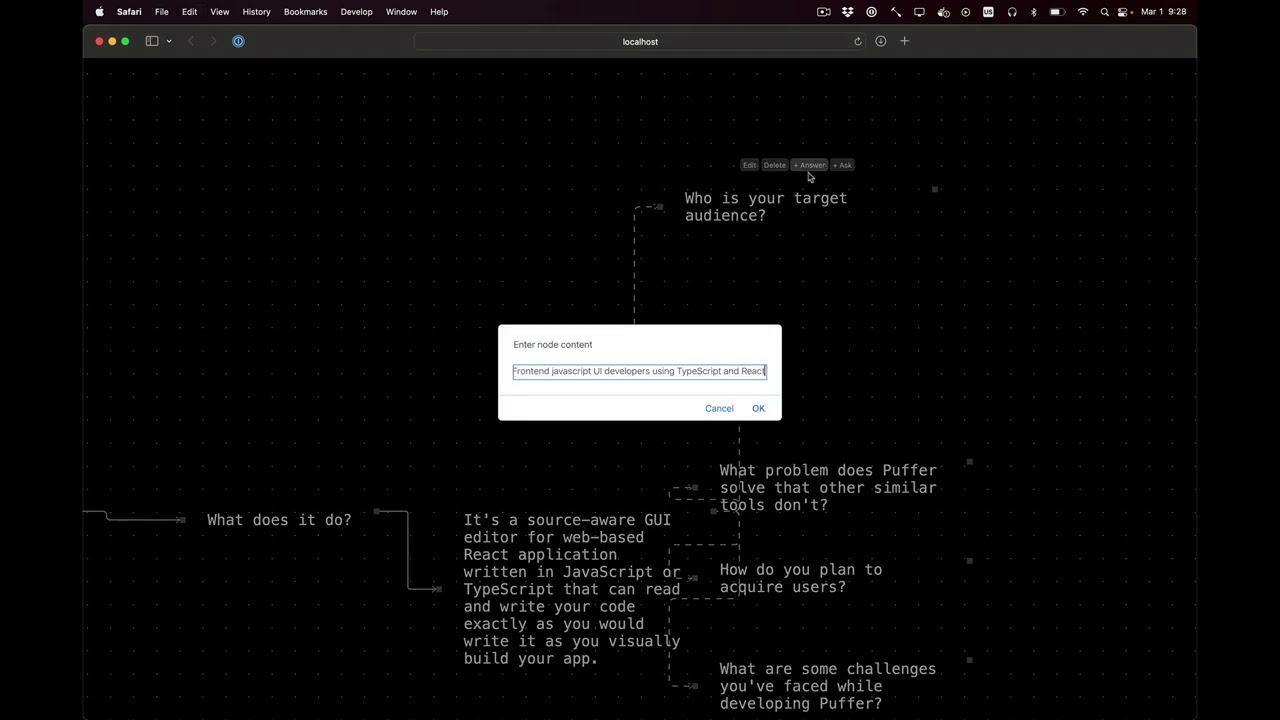

Transformed Mind is a tool I created to explore ideas that I might want to pursue as a startup, simulating how I prepare for YC interviews. Before an interview, I like to create a mind map of my startup, exploring the space to make sure I have a proper mental model but also to ensure that I don't get caught off guard by a question that I didn't think of beforehand. While making the mind map, I can use the tool to prompt GPT to ask me questions that I may not have thought of. As I answer questions, the tool builds up context by following the chain of answers, leading to better and better questions. It's a 90-minute hack that made me really appreciate how powerful modern LLMs are.